Extraction of cuboids and bounding box dimensions from scanned objects using Deep Learning methods

A wide variety of fields and applications, such as industrial manufacturing, human-machine interfaces and augmented reality, depend on the analysis of real-world data (objects, scenes, etc.). This data can be collected by 3D scanning devices and consists of a point cloud sampled on the surfaces of the objects in the scene. The point cloud must then be processed to perform tasks such as registration, classification, segmentation and modelling.

Currently, most Deep Learning (DL) techniques in computer vision are developed for 2D image data. However, 3D scanning devices have recently become more affordable and reliable, making large amounts of 3D data available. As a result, interest within the 3D computer vision community in DL architectures for 3D data has grown in recent years. While classification, segmentation and registration of point clouds are well-studied problems, methods for extracting the dimensions of scanned objects are not yet mature.

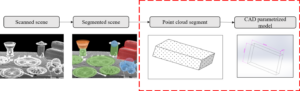

In this research, we propose a deep learning-based approach that extracts cuboid and bounding box dimensions from scanned objects represented as point clouds.

One of the major advantages of Neural Networks (NN) is their ability to generalize by learning from examples. The first step of this research was therefore to build a synthetic dataset to train our network. The second step is to process unstructured 3D point clouds with a NN, trained in a supervised manner, to predict the geometric parameters of the 3D model.
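The text does not describe how the synthetic cuboids were generated, so the following is only a minimal sketch under stated assumptions: each cuboid is axis-aligned and centred at the origin, its dimensions are drawn uniformly from a hypothetical range, and points are sampled uniformly on its surface. The function names `sample_cuboid_surface` and `make_dataset`, the default of 1024 points and the dimension range are all illustrative choices, not the authors' settings. Each sample pairs a point cloud with its (width, height, depth) label, which is what supervised regression needs.

```python
import random


def sample_cuboid_surface(width, height, depth, n_points, seed=None):
    """Sample n_points uniformly on the surface of an axis-aligned cuboid
    centred at the origin. Returns (points, label); the label is the
    (width, height, depth) triple used as the regression target."""
    rng = random.Random(seed)
    half = (width / 2, height / 2, depth / 2)
    # Area of one face normal to x, y and z respectively. Picking the face
    # axis proportionally to area keeps the surface density uniform.
    areas = (height * depth, width * depth, width * height)
    points = []
    for _ in range(n_points):
        axis = rng.choices((0, 1, 2), weights=areas)[0]
        p = [rng.uniform(-h, h) for h in half]
        # Snap the chosen coordinate onto one of the two opposite faces.
        p[axis] = half[axis] if rng.random() < 0.5 else -half[axis]
        points.append(tuple(p))
    return points, (width, height, depth)


def make_dataset(n_samples, n_points=1024, dim_range=(0.1, 1.0), seed=0):
    """Build a list of (points, dims) pairs with random cuboid dimensions.
    dim_range and n_points are hypothetical defaults."""
    rng = random.Random(seed)
    dataset = []
    for _ in range(n_samples):
        dims = [rng.uniform(*dim_range) for _ in range(3)]
        dataset.append(
            sample_cuboid_surface(*dims, n_points, seed=rng.randrange(2**32)))
    return dataset
```

A real pipeline would likely also randomize the orientation and position of each cuboid; that is omitted here to keep the labels trivially readable.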

Instead of designing a specific, highly complex regression model, we propose to take a general-purpose deep learning classification network and transform it into a regression model. This straightforward yet effective method produces good results when extracting the geometric parameters needed for 3D model reconstruction.
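The text does not name the classification network that is converted, so the toy model below is a hypothetical PointNet-style stand-in, written in plain Python with random, untrained weights. It only illustrates the idea of the conversion: the per-point backbone and the order-independent max-pooling are kept, while the final softmax over class scores is dropped so that the last linear layer outputs real-valued dimensions directly. Correspondingly, the training loss would change from cross-entropy to a regression loss such as mean squared error (MSE). The class name `TinyPointNet`, the layer sizes and the loss helpers are illustrative assumptions.

```python
import math
import random


def relu(x):
    return x if x > 0 else 0.0


class TinyPointNet:
    """Hypothetical PointNet-style toy: shared per-point layer, max-pool,
    then a head. mode='classify' returns softmax probabilities over n_out
    classes; mode='regress' returns the n_out raw linear outputs."""

    def __init__(self, hidden=16, n_out=3, mode="regress", seed=0):
        rng = random.Random(seed)
        self.mode = mode
        # Shared per-point layer: 3 coordinates -> hidden features.
        self.w1 = [[rng.gauss(0, 0.5) for _ in range(3)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        # Head: global feature -> n_out values.
        self.w2 = [[rng.gauss(0, 0.5) for _ in range(hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, points):
        # Same layer for every point, then max over points: the global
        # feature does not depend on the order of the points.
        feats = [[relu(sum(w * c for w, c in zip(row, p)) + b)
                  for row, b in zip(self.w1, self.b1)] for p in points]
        glob = [max(col) for col in zip(*feats)]
        out = [sum(w * g for w, g in zip(row, glob)) + b
               for row, b in zip(self.w2, self.b2)]
        if self.mode == "classify":
            m = max(out)  # subtract the max for numerical stability
            exps = [math.exp(o - m) for o in out]
            total = sum(exps)
            return [e / total for e in exps]
        return out  # regression: unbounded values, no softmax


def cross_entropy(probs, label):
    """Classification loss for a single sample."""
    return -math.log(probs[label])


def mse(pred, target):
    """Regression loss: mean squared error between predicted and true dims."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
```

For example, `TinyPointNet(n_out=40, mode="classify")` mirrors a 40-class ModelNet40 classifier, while `TinyPointNet(n_out=3, mode="regress")` reuses the same kind of backbone to output three box dimensions.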

The algorithm has been tested on the synthetic cuboid dataset created for this task, and its robustness to changes in point density, noise, missing data and outliers is demonstrated.
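How the four perturbations were simulated is not specified, so the helpers below are a sketch of one plausible choice for each, all hypothetical: random subsampling for density change, Gaussian jitter for noise, removal of all points inside a sphere for missing data (a crude occlusion model), and uniformly distributed extra points for outliers. Each function takes and returns a list of (x, y, z) tuples, so they can be chained on any test point cloud.

```python
import math
import random


def subsample(points, ratio, rng):
    """Density change: keep a random fraction `ratio` of the points."""
    k = max(1, int(len(points) * ratio))
    return rng.sample(points, k)


def add_noise(points, sigma, rng):
    """Noise: jitter every coordinate with Gaussian noise of std `sigma`."""
    return [tuple(c + rng.gauss(0.0, sigma) for c in p) for p in points]


def remove_region(points, center, radius):
    """Missing data: drop every point within `radius` of `center`."""
    return [p for p in points if math.dist(p, center) > radius]


def add_outliers(points, n_outliers, bound, rng):
    """Outliers: append points drawn uniformly in the cube [-bound, bound]^3."""
    extra = [tuple(rng.uniform(-bound, bound) for _ in range(3))
             for _ in range(n_outliers)]
    return points + extra
```

Sweeping one parameter at a time (for instance `ratio` or `sigma`) while keeping the others fixed makes it possible to plot the dimension error against the strength of each perturbation separately.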

Moreover, to demonstrate that the algorithm correctly extracts the bounding box parameters of everyday objects, we tested it on the Princeton ModelNet40 dataset.
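The text does not say whether the bounding boxes are axis-aligned or oriented, nor which error metric is reported. Assuming axis-aligned boxes, a reference box for each object can be computed directly from the minimum and maximum coordinates of its points, and a simple mean absolute error (a hypothetical choice of metric) compares predicted and reference dimensions. Since ModelNet40 models are distributed as meshes, points would first have to be sampled from each mesh surface.

```python
def axis_aligned_bbox(points):
    """Return (min_corner, max_corner, dims) of the axis-aligned bounding
    box enclosing a list of (x, y, z) points."""
    xs, ys, zs = zip(*points)
    lo = (min(xs), min(ys), min(zs))
    hi = (max(xs), max(ys), max(zs))
    dims = tuple(h - l for h, l in zip(hi, lo))
    return lo, hi, dims


def dimension_error(pred_dims, true_dims):
    """Mean absolute error between predicted and reference dimensions."""
    return sum(abs(p - t) for p, t in zip(pred_dims, true_dims)) / len(true_dims)
```

For the points `(0, 0, 0)`, `(2, 1, 0.5)` and `(1, 0.5, 0.25)`, `axis_aligned_bbox` yields dimensions `(2, 1, 0.5)`. An axis-aligned box depends on the object's orientation, so an oriented box would be the more robust target if scans are not aligned to a canonical pose.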