3D Dimension Extraction from a Scanned Hand for Design and Modeling of Hand Prosthesis using Deep-Learning Methods

ISMBE Poster Session

Over three million people worldwide are arm amputees, and many of them need a hand prosthesis. One known method for designing hand prostheses is to fit a generic prosthesis design to the patient, using data from a scan of the patient's hand. Currently, both dimension extraction and prosthesis design are performed manually, which is tedious, time-consuming, and inaccurate. With the development of imaging tools, modeling methods, and advanced scanning technologies, the prosthesis design process has the potential to be automated efficiently.

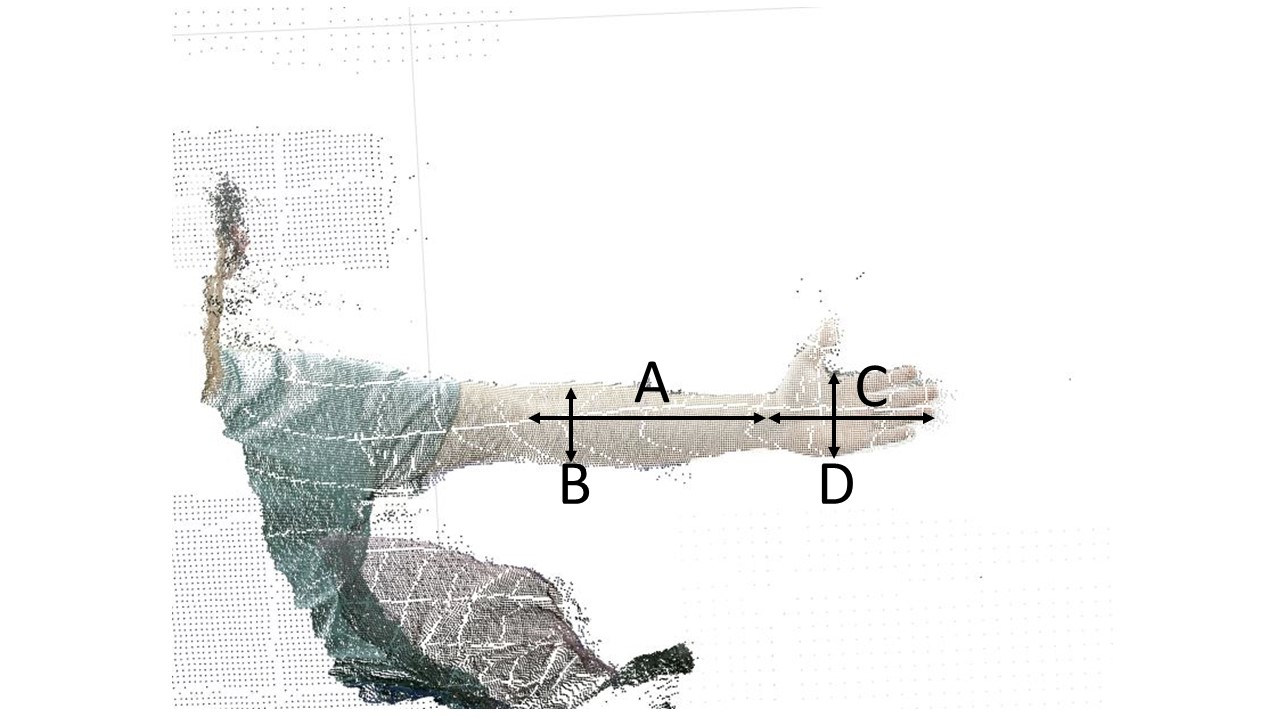

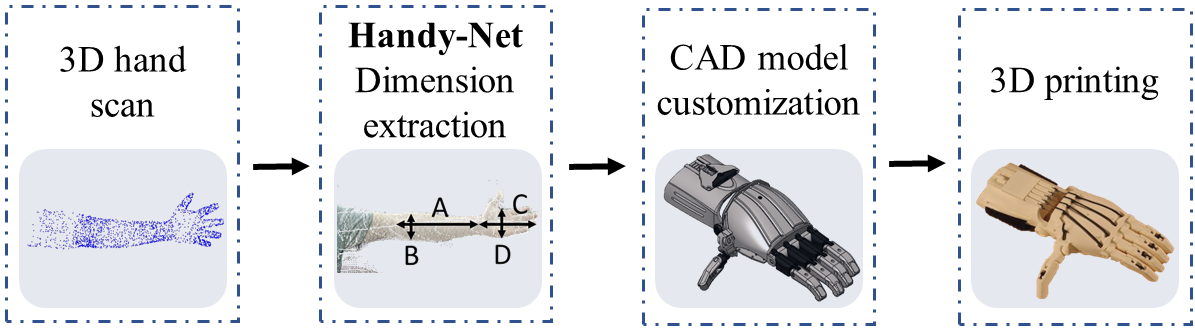

This research proposes a method for extracting dimensions from a 3D-scanned hand that enables the modeling of a personalized hand prosthesis without designer intervention. The method simplifies the procedure of adapting and fitting the prosthesis to the patient. The main stages of the fitting process are: 3D scanning of the healthy hand, processing the scanned data with a deep neural network (DNN) to extract dimensions, and applying the relevant dimensions to a 3D CAD model of a hand. The resulting CAD model can then be 3D printed with accessible, low-cost materials.
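The three stages can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not specify the network or the dimensions it outputs, so `extract_dimensions` is a simple bounding-box placeholder standing in for the DNN stage, and the dimension names, the template values in `GENERIC_TEMPLATE_MM`, and the axis convention (x across the palm, y along the fingers, z through the hand) are all assumptions made for illustration.

```python
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float, float]

# Hypothetical nominal dimensions (mm) of a generic prosthesis CAD template.
GENERIC_TEMPLATE_MM: Dict[str, float] = {
    "hand_length": 180.0,
    "palm_width": 85.0,
    "hand_thickness": 30.0,
}


def extract_dimensions(points: Sequence[Point]) -> Dict[str, float]:
    """Placeholder for the DNN stage: axis-aligned extents of the point cloud.

    Assumes the scan is already aligned so that x runs across the palm,
    y along the fingers, and z through the thickness of the hand.
    """
    if not points:
        raise ValueError("point cloud is empty")
    xs, ys, zs = zip(*points)
    return {
        "palm_width": max(xs) - min(xs),
        "hand_length": max(ys) - min(ys),
        "hand_thickness": max(zs) - min(zs),
    }


def fit_template(
    measured: Dict[str, float],
    template: Dict[str, float] = GENERIC_TEMPLATE_MM,
) -> Dict[str, float]:
    """Return per-dimension scale factors mapping the template to the patient."""
    return {name: measured[name] / template[name] for name in template}


if __name__ == "__main__":
    # Toy "scan": three points standing in for a real point cloud.
    cloud = [(0.0, 0.0, 0.0), (42.5, 90.0, 15.0), (10.0, 20.0, 5.0)]
    dims = extract_dimensions(cloud)
    print(dims)
    print(fit_template(dims))
```

In a real pipeline the scale factors would drive the parameters of a parametric CAD model; here they are only returned as numbers so the data flow between stages is visible.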

The main contribution of this research is a new method for extracting hand dimensions from a 3D-scanned hand using a learning-based technique. This method significantly improves the personalized design process for a hand prosthesis, making it faster, more cost-effective, and more accessible in developing regions.

|

|

|

dimensions taken from hand scan (point cloud) |

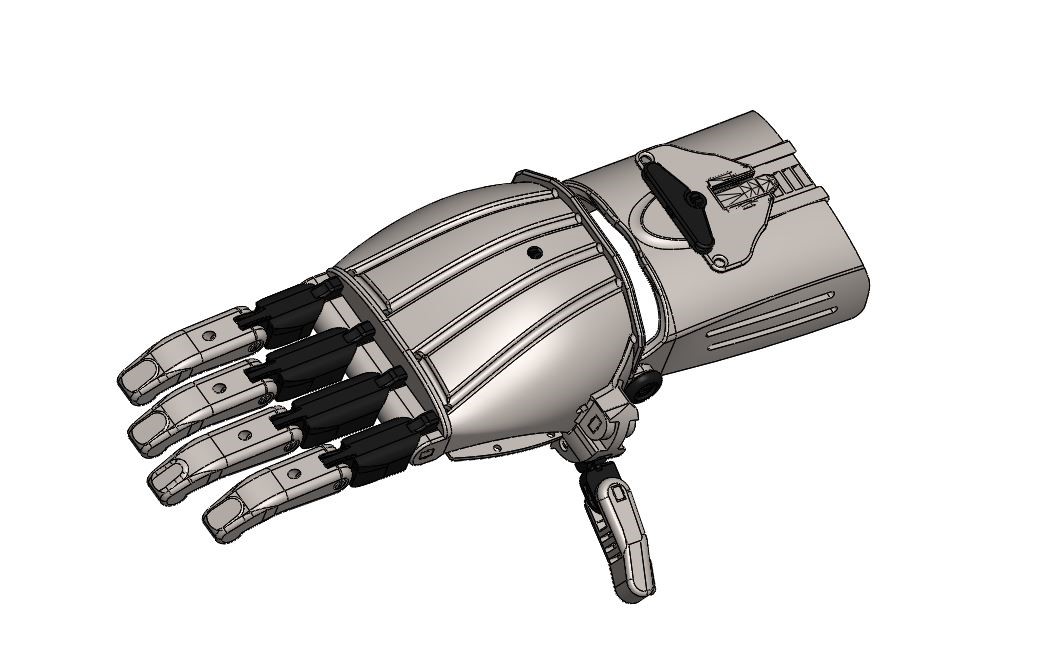

Prosthesis CAD Model |

|

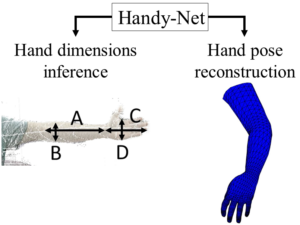

| Handy-Net Output |

|

| Prosthesis Design Pipeline |